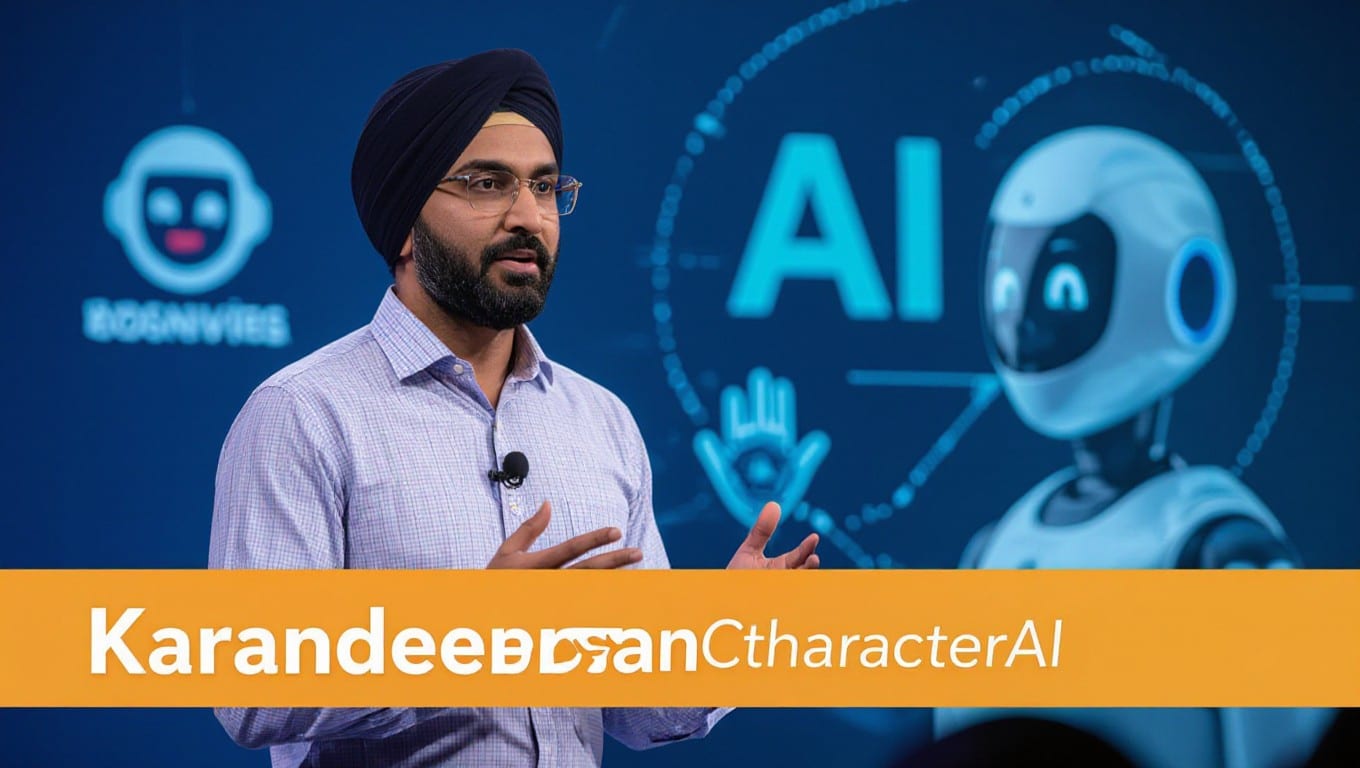

Kids today have unprecedented access to AI chatbots, and concerns about exposure to inappropriate content and other safety risks have grown alongside the technology. Character.AI’s new CEO, Karandeep Anand, is working to strengthen safety measures such as content filtering and parental controls to protect young users. At the same time, the platform’s approach to interactive storytelling and creative engagement remains central to its appeal, and the company is trying to balance fun and security in a rapidly evolving space.

A New Era of AI Leadership

Karandeep Anand steps into Character.AI’s CEO role bringing a unique combination of deep tech expertise and personal insight, guiding the company through rising safety concerns and intense market rivalry. Balancing innovation with responsibility, he’s already focused on refining the platform’s safety features without stifling creativity, while leading a compact, passionate team that aims to redefine interactive AI entertainment. You can expect leadership that actively anticipates challenges—like abuse of new tools—and fosters a creator-driven ecosystem that leans into both enjoyable AI experiences and robust trust measures.

Karandeep Anand’s Vision for Character.AI

Anand envisions Character.AI as more than just another chatbot provider; he aims to transform it into an immersive, interactive entertainment platform where users co-create stories rather than passively consume content. Under his leadership, the emphasis is on developing AI characters that engage through rich conversational cues and diverse personas, forging new, meaningful social connections. By doubling down on both entertainment value and safety, Anand believes the platform can outpace competitors while carving out a distinct niche within the evolving AI landscape.

The Role of Personal Experience in Shaping Leadership

Drawing from his own parenting experience, Anand brings a grounded perspective to decisions on user safety and product design. His daily use of Character.AI with his daughter informs a leadership approach that prioritizes trust and protection alongside innovation. This personal connection allows him to better understand what families need from the platform—balancing child-friendly features with creative freedom—and shapes ongoing efforts to refine safeguarding mechanisms without creating an overly restrictive environment.

Addressing Safety Concerns: Innovations and Challenges

Character.AI has taken significant steps to strengthen its safety framework, balancing innovation with user protection. You’ll find new tools like pop-ups directing users toward helpful resources when sensitive topics arise and AI model adjustments that reduce exposure to suggestive content for minors. Still, the company is exploring ways to refine safety filters to better understand context, preventing unnecessary censorship in harmless conversations like creative role play. Continual testing of features, including the newly launched animated video tool, showcases an ongoing commitment to staying ahead of misuse while enhancing user experience.

Implementing New Safeguards for Youth

Updated AI models now tailor conversations for users under 18, reducing the chance that they encounter inappropriate content. You can opt in to receive weekly parental reports detailing your teen’s interactions, providing increased transparency. The platform also shows pop-ups that direct users discussing self-harm or suicide to the National Suicide Prevention Lifeline (now the 988 Suicide & Crisis Lifeline). Despite these measures, Character.AI does not verify users’ ages during signup, which remains a significant obstacle to enforcing safety protocols for younger users.

Navigating Legal Pressures and Public Scrutiny

Lawsuits by families alleging that their children were exposed to harmful content have placed intense legal pressure on Character.AI and put its responsibility for user safety in the spotlight. The company is responding by updating its safety policies and technology, but public and regulatory scrutiny continues. Lawmakers and advocacy groups are pushing for tighter controls, especially for minors, while the company defends itself against the claims and works on safeguards that could influence industry standards.

Character.AI faces legal challenges stemming from tragic incidents, including a lawsuit alleging that a 14-year-old Florida boy’s suicide was linked to his chatbot interactions, and it has implemented new policies aimed at protecting vulnerable users. These lawsuits compel the company to engage with regulators and advocacy organizations, fostering dialogue on safer AI companionship. The pressure extends beyond the courts: public opinion, media coverage, and advocacy group demands all steer Character.AI’s strategy toward more robust, transparent safety measures amid fierce competition.

Redefining User Interaction with AI

Character.AI is changing how you engage with artificial intelligence by emphasizing dynamic, interactive storytelling over passive consumption. Rather than simply answering questions, its chatbots imitate human conversational cues, weaving references to facial expressions and gestures into their replies to create a more immersive experience. This approach encourages you to co-create narratives and role play, blurring the line between audience and creator. With personas ranging from therapist-style characters (which carry disclaimers that they are not real professionals) to fantasy characters, the platform positions AI as a personalized entertainment partner, making your interactions both lively and contextually rich.

Enhancing User Experience through Creative Engagement

You gain more than just chatbot conversations; you enter a world where your imagination shapes the experience. Character.AI invites you to build custom personas or chat with AI characters modeled on celebrities and fictional icons. This creative freedom turns every session into a unique collaboration, moving entertainment from passive scrolling to active participation. Anand emphasizes growing a new creator ecosystem in which your input influences narrative possibilities, fostering a deeper connection that keeps you engaged and entertained beyond typical AI chat applications.

Balancing Safety Filters with User Freedom

Character.AI’s evolving safety filters aim to protect you, especially young users, without stifling legitimate creative expression. The current model sometimes censors harmless content—like vampire-themed fan fiction references to blood—which Anand acknowledges and plans to refine. By improving contextual understanding, the platform seeks to loosen overly strict restrictions while maintaining robust safeguards against inappropriate or harmful material, striking a careful balance between trust and freedom.

The challenge lies in finely tuning these filters to distinguish between harmless fantasy and genuine risk. Character.AI uses a combination of AI model adjustments and user activity monitoring, such as weekly parental email updates for teens, to minimize exposure to sensitive content. Meanwhile, safeguards like pop-ups linking users mentioning self-harm to crisis resources exemplify proactive measures. Anand’s goal is a safety system that adapts intelligently, reducing false positives without opening doors to misuse, ensuring you can safely explore rich, immersive experiences without unnecessary interruptions.

Competing in a Crowded AI Marketplace

Character.AI navigates an increasingly saturated AI landscape, competing not just with giants like ChatGPT and Meta’s AI tools but also with emerging startups. You’ll notice the company differentiates itself with its specialty in diverse, personality-driven chatbots and an emphasis on interactive entertainment. The challenge lies in balancing innovation with safety, especially amid lawsuits and regulatory scrutiny. Keeping user trust while expanding features requires deft management of both technology and public perception.

Strategies for Attracting Creators and Users

Driving growth depends heavily on cultivating a robust creator ecosystem; Anand is focused on making it easier for you to build and share unique chatbot personas. Upgrading the social feed to showcase user-generated content encourages community engagement and creativity. This social dimension, inspired by Meta’s recent efforts, invites you to become an active participant rather than a passive consumer, fueling organic growth and deepening user attachment.

Managing Talent Retention Amid Industry Competition

Retention becomes harder as major players like Meta aggressively recruit AI experts with lucrative packages, sometimes worth hundreds of millions of dollars. Character.AI’s ability to hold onto talent relies on fostering a passionate, mission-driven culture that employees find motivating and rewarding, in contrast to the purely financial pull of big-budget rivals. Anand’s leadership stresses the unique purpose behind the company’s work, which helps keep the team committed despite offers elsewhere.

The Ethical Landscape of AI Companionship

Balancing the promise of AI companionship with the risks it presents demands ongoing vigilance. You’re navigating a world where chatbots can simulate empathy and intimacy, but also inadvertently foster unhealthy emotional dependencies or expose vulnerable users to inappropriate interactions. Character.AI aims to fine-tune its safeguards, offering a platform that encourages creativity and fun while actively mitigating harm through dynamic content filtering and user education — particularly as the lines between entertainment and emotional support continue to blur.

Understanding the Psychological Effects on Young Users

Young users engaging with AI characters may experience blurred boundaries between reality and fiction, leading to attachment or emotional reliance on digital personas. Instances reported by families highlight how suggestive or self-harm-related content can intensify vulnerabilities. You should be aware that under-18 users often face a complex emotional landscape, and AI companions can unwittingly magnify these challenges despite safety filters designed to reduce exposure to sensitive material.

Advocating for Responsible AI Use

Promoting responsible use involves clear communication about AI limitations and realistic expectations, especially for younger audiences and their caregivers. Character.AI’s weekly parental activity reports and suicide prevention prompts are examples of practical steps to empower you in overseeing safe interactions, while efforts to evolve safety filters aim to reduce over-censorship without compromising protection.

In addition to technical measures, responsible AI use calls for fostering digital literacy around AI companionship. You benefit when platforms like Character.AI invest in transparency, educating users on the distinction between AI personas and real human connections. This includes clarifying chatbots’ non-professional status, discouraging reliance on AI for mental health support, and creating community standards that discourage exploitative content. Such advocacy helps build a safer environment where users can enjoy AI innovation without unintended psychological risks.

To wrap up

As concerns about kids using chatbots grow, Character.AI’s new CEO Karandeep Anand is focused on enhancing safety without compromising the user experience. You can expect ongoing improvements in AI moderation, more transparent parental controls, and smarter content filters that better understand context. Anand aims to create a safer environment for young users while encouraging creativity and interaction. By balancing trust, safety, and entertainment, the platform intends to offer you a more engaging and responsible AI experience.